Checklist for Building a Cloud Data Engineer Portfolio

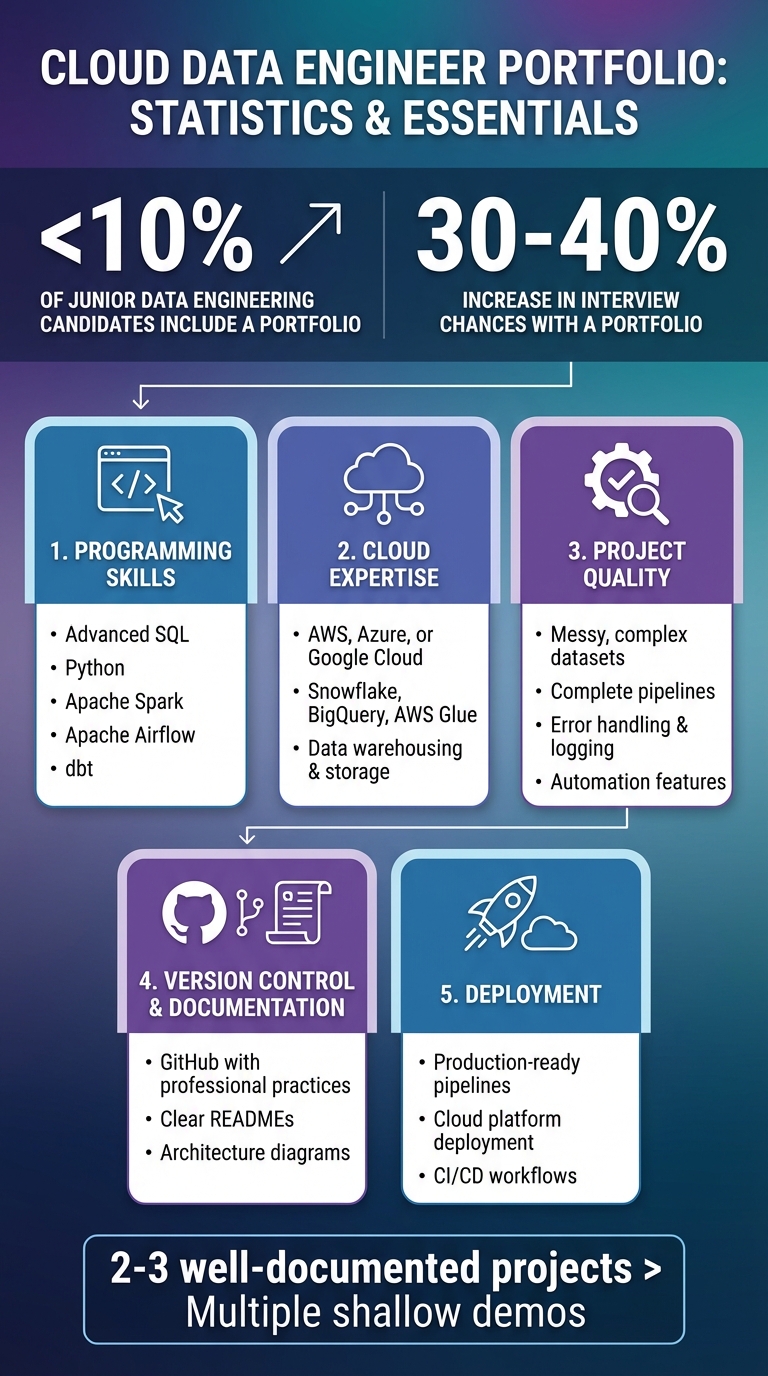

Fewer than 10% of junior data engineering candidates include a portfolio in their applications, yet having one can raise your chances of landing interviews by 30–40%. A portfolio doesn’t just list your skills; it proves them. It shows you can build, deploy, and maintain functional data systems, which is exactly what employers are looking for in 2026.

Here’s what to include in your portfolio:

- Programming Skills: Advanced SQL, Python, Apache Spark for processing large datasets, and tools like Apache Airflow and dbt for orchestration and transformations.

- Cloud Expertise: Focus on AWS, Azure, or Google Cloud, showcasing storage, data warehousing, and processing services like Snowflake, BigQuery, or AWS Glue.

- Project Quality: Use messy, complex datasets and build complete pipelines with features like error handling, logging, and automation.

- Version Control & Documentation: Use GitHub with professional practices, clear READMEs, and architecture diagrams.

- Deployment: Showcase production-ready pipelines deployed on cloud platforms with CI/CD workflows.

Focus on quality over quantity: 2–3 well-documented projects with measurable results will make a stronger impression than a long list of shallow ones. Update your portfolio regularly with new skills, professional certifications, and projects. This combination of practical experience and technical depth gives you an edge in a competitive job market.

Skills and Tools to Include

Show that you can manage the complete data lifecycle - from data ingestion to transformation and deployment. Highlight the languages, frameworks, and platforms hiring managers look for.

Programming and Data Processing Skills

SQL is a must-have. It’s the backbone for querying, transforming, and validating data in cloud warehouses. As Zach Wilson puts it:

"Avoiding SQL is the same as avoiding a job in data engineering."

Go beyond the basics. Showcase your ability to use advanced SQL techniques like complex joins, window functions, and common table expressions.
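As a minimal sketch of what that can look like in a project README, the example below combines a common table expression with two window functions. It runs the SQL through Python's built-in sqlite3 module so anyone can reproduce it (assuming SQLite 3.25 or newer, which added window functions). The `orders` table and its rows are invented for illustration.

```python
import sqlite3

# Hypothetical orders table, invented for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (order_id INTEGER, customer TEXT, order_date TEXT, amount REAL);
INSERT INTO orders VALUES
  (1, 'alice', '2026-01-01', 50.0),
  (2, 'alice', '2026-01-03', 70.0),
  (3, 'bob',   '2026-01-02', 20.0),
  (4, 'bob',   '2026-01-05', 90.0),
  (5, 'alice', '2026-01-04', 30.0);
""")

QUERY = """
WITH ranked AS (
    SELECT
        customer,
        order_date,
        amount,
        -- 1 = most recent order per customer
        ROW_NUMBER() OVER (PARTITION BY customer ORDER BY order_date DESC) AS recency_rank,
        -- cumulative spend per customer, oldest order first
        SUM(amount) OVER (PARTITION BY customer ORDER BY order_date) AS running_total
    FROM orders
)
SELECT customer, order_date, amount, running_total
FROM ranked
WHERE recency_rank = 1
ORDER BY customer;
"""

latest_orders = conn.execute(QUERY).fetchall()
for row in latest_orders:
    print(row)
# ('alice', '2026-01-04', 30.0, 150.0)
# ('bob', '2026-01-05', 90.0, 110.0)
```

The same query shape carries over to cloud warehouses, although function names and date handling can differ between SQL dialects.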

Python dominates when it comes to building pipelines and automating workflows. Highlight how you’ve used Python for tasks like API integration, data processing with Pandas, or writing integration code. Additionally, Apache Spark, particularly PySpark, is the go-to for distributed processing at scale. Include a project where you’ve worked with large datasets - millions of records - to demonstrate your understanding of parallelization and partitioning.

For orchestration, Apache Airflow is the industry favorite for scheduling and monitoring workflows. Include examples of Directed Acyclic Graphs (DAGs) that manage task dependencies, retries, and failure handling. Pair this with dbt (data build tool) to show you can handle SQL-based transformations and modeling in cloud warehouses. If you’ve worked with real-time data, include Apache Kafka to demonstrate your experience with event-driven architectures for streaming ingestion. Java and Scala aren’t always required, but they’re valuable for roles focused on high-performance Spark or Flink.

Once you’ve demonstrated your programming expertise, shift focus to showcasing your experience with cloud platforms.

Cloud Platform Experience

Hiring managers want to see that you can deploy and manage pipelines in production. AWS is the most common platform, but Azure is popular in enterprise environments, and Google Cloud Platform (GCP) adds value to multi-cloud portfolios. You don’t need to master all three - focus on one or two and build deep expertise.

Start with storage services like Amazon S3, Azure Data Lake Storage Gen2, or Google Cloud Storage to show you understand data lake fundamentals. Then, demonstrate your knowledge of data warehousing with tools like Snowflake (which runs on all three clouds), Amazon Redshift, Google BigQuery, or Azure Synapse Analytics. For data processing, highlight your experience with AWS Glue, Amazon EMR, or Databricks for running Spark jobs at scale. For orchestration, use managed services like Amazon MWAA (Managed Workflows for Apache Airflow) or Google Cloud Composer.

You can access many of these tools for free. AWS offers free-tier usage for S3 and Lambda, Snowflake provides a 30-day trial with $400 in credits, and BigQuery lets you process up to 1 TB of query data per month at no cost. Mastering these platforms demonstrates your ability to build and deploy production-level pipelines.

Version Control and Documentation

Technical skills alone aren’t enough: strong version control and clear documentation are essential. GitHub is the standard place to host your portfolio, but simply uploading code won’t set you apart. Show professional practices such as descriptive commit messages (e.g., "fixed schema validation in Airflow DAG") instead of vague ones, and use feature branches to keep the main branch stable. Pin your top 2–3 projects to your profile and use the Profile README feature to introduce your tech stack.

Your README files are just as important as your code. Hiring managers typically spend only 5–10 minutes reviewing a portfolio, so make your README count. Include a concise project summary, the technologies used, setup instructions, and - most importantly - quantify your results. For example, instead of saying "built a pipeline", write, "reduced processing time by 80% using Spark partitioning" or "processed 1 million records in under 2 minutes". Use tools like draw.io or Lucidchart to create architecture diagrams that visualize your data flow.

To ensure reproducibility, include a requirements.txt or Dockerfile in every repository. Organize your code into clean directories like /src, /data, /notebooks, and /tests to showcase a professional project structure. As Chris Garzon emphasizes:

"A well-crafted README file is your project's first impression. Think of it as the welcoming mat to your repository."

Checklist for Selecting Portfolio Projects

The projects you include in your portfolio can either showcase your expertise or fall flat. Because reviewers spend only a few minutes on each portfolio, every project needs to prove that you can tackle complex, real-world challenges, not just follow step-by-step tutorials. Choose projects that highlight both your technical skills and your ability to build solutions that are practical and ready for production. Here's how to ensure your portfolio projects stand out.

Use Complex, Messy Datasets

Skip the overly simplistic datasets like Titanic or Iris. These datasets might work for beginners, but they don’t reflect the messy, unpredictable nature of real-world data. Real data engineering involves working with datasets full of problems - missing values, duplicate records, schema mismatches, and late-arriving data. Think about scraping data from APIs with inconsistent JSON responses, handling logs with irregular timestamps, or merging data from sources with conflicting schemas.

As Saurabh Garg from Techno Devs explains:

"Real data engineering lives in the mess - not in clean tutorials."

Showcase your ability to handle these challenges by using tools like Great Expectations to validate data quality. In your README file, detail how you identified and resolved issues like missing or inconsistent data. This approach demonstrates that you understand clean data is a rarity and know how to work through the chaos. Once you’ve tackled data quality, take it further by building comprehensive, end-to-end pipelines.
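Here is a minimal sketch of the kind of cleanup this section describes: deduplicating records, normalizing inconsistent timestamps, and quarantining rows with missing values. It uses only the Python standard library rather than Great Expectations, and the sample records, the two timestamp formats, and the function names are all assumptions made for illustration.

```python
from datetime import datetime, timezone

# Hypothetical raw records, invented for illustration: mixed timestamp
# formats, a duplicate event, a missing value, and an unparseable timestamp.
RAW_EVENTS = [
    {"id": "a1", "ts": "2026-01-05T10:00:00Z", "amount": "19.99"},
    {"id": "a1", "ts": "2026-01-05T10:00:00Z", "amount": "19.99"},  # duplicate
    {"id": "b2", "ts": "05/01/2026 11:30", "amount": None},          # missing amount
    {"id": "c3", "ts": "not a timestamp", "amount": "5"},            # unparseable
]

# Assumed formats for this sketch; a real source may need more.
TIMESTAMP_FORMATS = ("%Y-%m-%dT%H:%M:%SZ", "%d/%m/%Y %H:%M")


def parse_timestamp(value):
    """Try each known format; return a UTC datetime, or None if none match."""
    for fmt in TIMESTAMP_FORMATS:
        try:
            return datetime.strptime(value, fmt).replace(tzinfo=timezone.utc)
        except ValueError:
            continue
    return None


def clean_events(events):
    """Return (clean_rows, rejected_rows), keeping the first row per id."""
    seen, clean, rejected = set(), [], []
    for event in events:
        if event["id"] in seen:
            continue  # skip repeated ids
        seen.add(event["id"])
        ts = parse_timestamp(event["ts"])
        if ts is None or event["amount"] is None:
            rejected.append(event)  # quarantine instead of silently dropping
            continue
        clean.append({"id": event["id"], "ts": ts, "amount": float(event["amount"])})
    return clean, rejected


clean, rejected = clean_events(RAW_EVENTS)
print(f"{len(clean)} clean, {len(rejected)} rejected")  # 1 clean, 2 rejected
```

Keeping the rejected rows, rather than discarding them, gives you something concrete to report in the README: how many records failed and why.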

Build Complete Data Pipelines

Your projects should demonstrate the entire data lifecycle: from ingesting data via APIs, web scraping, or SQL databases, to transforming it with tools like Apache Spark or dbt, to storing it in cloud platforms like Snowflake or BigQuery. Orchestrate the entire process with tools like Apache Airflow.

Make sure to include features like error handling, logging, and automated retries. These details show that your pipelines are designed to handle failures - because, let’s face it, real-world pipelines crash.
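One way to sketch these three features together is a small retry wrapper with logging. This is a plain-Python illustration under stated assumptions, not how Airflow or any other orchestrator implements retries; `run_with_retries` and `flaky_extract` are hypothetical names, and the backoff values are arbitrary.

```python
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
logger = logging.getLogger("pipeline")


def run_with_retries(task, max_attempts=3, base_delay=0.1):
    """Run task(); on failure, log it, wait with exponential backoff, and retry."""
    for attempt in range(1, max_attempts + 1):
        try:
            result = task()
            logger.info("task succeeded on attempt %d", attempt)
            return result
        except Exception as exc:
            logger.warning("attempt %d/%d failed: %s", attempt, max_attempts, exc)
            if attempt == max_attempts:
                logger.error("giving up after %d attempts", max_attempts)
                raise  # surface the last error to the caller
            time.sleep(base_delay * 2 ** (attempt - 1))


# Hypothetical flaky extract step: fails twice, then succeeds.
calls = {"count": 0}


def flaky_extract():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("source API timed out")
    return ["row-1", "row-2"]


rows = run_with_retries(flaky_extract)
print(rows)  # ['row-1', 'row-2']
```

In an orchestrated pipeline you would usually rely on the scheduler's own retry settings instead; the point of the sketch is that failures are expected, logged, and bounded.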

Quality over quantity matters here. A single, well-documented project that tackles production-level challenges is far more impressive than multiple shallow examples. Use your README to explain how the project solves real-world problems, not just to list the technologies you used. As Saurabh Garg points out:

"Hiring managers are not impressed by pipelines. They are impressed by thinking."

Use Multiple Tools and Technologies

Don’t box yourself into using just one tool. A strong portfolio showcases how you combine various modern technologies to solve practical problems. For example:

- Use dbt to design dimensional models and apply ELT best practices.

- Leverage Apache Spark (or PySpark) to process large datasets with partitioning and parallelization.

- Add Great Expectations to validate data quality and prevent errors from propagating.

- For real-time projects, integrate tools like Apache Kafka or AWS Kinesis to demonstrate event-driven architectures.
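To make the partitioning idea from the Spark bullet concrete without a cluster, here is a plain-Python sketch. It is not Spark code: it only illustrates the pattern of splitting data by key so each partition can be processed independently. The records and function names are invented for illustration, and threads are used only to show the structure (CPython threads do not speed up CPU-bound work).

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

# Hypothetical sales records, invented for illustration.
RECORDS = [
    {"region": "east", "amount": 10},
    {"region": "west", "amount": 5},
    {"region": "east", "amount": 7},
    {"region": "north", "amount": 3},
    {"region": "west", "amount": 8},
]


def partition_by_key(records, key):
    """Group records so every row with the same key lands in one partition."""
    partitions = defaultdict(list)
    for record in records:
        partitions[record[key]].append(record)
    return dict(partitions)


def total_amount(partition):
    """Per-partition work; it never needs rows from another partition."""
    return sum(row["amount"] for row in partition)


partitions = partition_by_key(RECORDS, "region")
with ThreadPoolExecutor(max_workers=3) as pool:
    # pool.map returns results in the same order as its input.
    totals = dict(zip(partitions, pool.map(total_amount, partitions.values())))

print(totals)  # {'east': 17, 'west': 13, 'north': 3}
```

In a README, the interesting part is the reasoning behind the partition key: a key with a few very large groups leaves most workers idle.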

Take it a step further by containerizing your pipeline with Docker and automating deployments using GitHub Actions. These additions show that your solutions are production-ready and scalable. Combining multiple tools in a single project highlights your ability to build robust systems and to work through real implementation and deployment challenges. Earning a data engineering certification can reinforce that evidence.

Implementation and Deployment Best Practices

A professional portfolio isn’t just a list of projects; it’s a showcase of your ability to implement, deploy, and maintain production-level solutions. The way you approach implementation and deployment can reveal whether you’re presenting polished, production-ready code or simply following tutorials. With fewer than 1 in 10 junior data engineering candidates submitting a portfolio alongside their application, demonstrating professional implementation practices can immediately set you apart.

Write Clean, Modular Code

Long, tangled scripts with hundreds of lines are a red flag. Instead, structure your code into logical modules. Separate key functions like data ingestion, transformation, and validation into distinct components. This approach makes your code easier to test, maintain, and reuse. Stick to PEP 8 standards, use tools like Black for formatting, and include essential files like requirements.txt or pyproject.toml to ensure reproducibility.
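The separation described above can be sketched as follows. This is a minimal single-file illustration; in a real repository each stage would likely live in its own module under /src. The inline CSV, the column names, and the function names are assumptions made for the example.

```python
import csv
import io

# Hypothetical inline CSV standing in for a real source file or API response.
SAMPLE_CSV = "city,temp_f\nAustin,95\nBoston,68\nDenver,\n"


def ingest(raw_text):
    """Ingestion: parse raw CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def validate(rows):
    """Validation: keep rows whose temperature is present and numeric."""
    valid = []
    for row in rows:
        try:
            float(row["temp_f"])
        except (TypeError, ValueError):
            continue
        valid.append(row)
    return valid


def transform(rows):
    """Transformation: convert Fahrenheit to Celsius, rounded to one decimal."""
    return [
        {"city": row["city"], "temp_c": round((float(row["temp_f"]) - 32) * 5 / 9, 1)}
        for row in rows
    ]


def run_pipeline(raw_text):
    """Compose the stages; each one can be unit-tested on its own."""
    return transform(validate(ingest(raw_text)))


if __name__ == "__main__":
    print(run_pipeline(SAMPLE_CSV))
    # [{'city': 'Austin', 'temp_c': 35.0}, {'city': 'Boston', 'temp_c': 20.0}]
```

Because each stage takes and returns plain data, a test in /tests can call `validate` or `transform` directly without touching a file or network.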

Don’t forget the details that make your code production-ready. Wrap failure-prone steps in explicit error handling, and record what happened with Python’s logging module. As Chris Garzon from Data Engineer Academy puts it:

"A well-crafted portfolio that demonstrates real problem-solving with modern data tools can instantly catapult you into that top 10% of candidates who get remembered (and interviewed)."

Once your code is clean and modular, take the next step: deploy it in real-world environments.

Deploy Projects to Cloud Platforms

Avoid keeping your portfolio projects confined to your local machine. Deploy them to major cloud platforms to demonstrate your ability to work with scalable, real-world tools. Use infrastructure-as-code (IaC) tools like Terraform or AWS CloudFormation to ensure repeatability and showcase modern deployment practices. Connecting your cloud projects to GitHub also highlights your understanding of version control workflows.

For intermediate-level projects, try serverless architectures using AWS Lambda, API Gateway, and DynamoDB. These designs emphasize scalability and low maintenance. Enhance your portfolio further by including architecture diagrams in your README. Tools like draw.io or Lucidchart can help you visually explain your data flow - from data sources to staging zones, through transformations, and into your data warehouse. Be sure to document the cloud services you used and any measurable improvements you achieved, like cutting storage costs by 15% or reducing processing time by 20%.

To stand out even more, set up a CI/CD pipeline using GitHub Actions or AWS CodePipeline. This shows you can automate the build, test, and deployment process whenever changes are pushed to your repository.

Automate and Monitor Workflows

Automation and monitoring are essential for production-grade solutions. Use orchestration tools like Apache Airflow or Prefect to automate your pipelines. Set up failure alerts via email or Slack, and track performance metrics with a tool like Prometheus. For cloud-based projects, managed services like Google Cloud Composer or Amazon Managed Workflows for Apache Airflow (MWAA) can simplify orchestration.

Adding an Airflow DAG (Directed Acyclic Graph) to your portfolio can demonstrate your ability to automate workflows at scale. For streaming pipelines, monitor metrics like system latency and data freshness to ensure reliability. Explicitly document your failure recovery process, such as: “Airflow retries this task three times before sending an alert.” This highlights your focus on building robust systems.
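A data freshness check like the one mentioned above can be sketched in a few lines. This is an illustrative example only: the 15-minute threshold, the timestamps, and the `check_freshness` name are assumptions, and a real pipeline would send the alert to a channel such as email or Slack instead of printing it.

```python
from datetime import datetime, timedelta, timezone


def check_freshness(latest_event_time, now, max_lag=timedelta(minutes=15)):
    """Return (is_fresh, lag), where lag is how far the data trails `now`."""
    lag = now - latest_event_time
    return lag <= max_lag, lag


# Hypothetical timestamps; in a real pipeline `latest` would come from
# something like MAX(event_time) on the target table.
now = datetime(2026, 1, 5, 12, 0, tzinfo=timezone.utc)
latest = datetime(2026, 1, 5, 11, 40, tzinfo=timezone.utc)

is_fresh, lag = check_freshness(latest, now)
if not is_fresh:
    print(f"ALERT: data is {lag} behind (threshold 0:15:00)")
    # ALERT: data is 0:20:00 behind (threshold 0:15:00)
```

Passing `now` in as an argument, rather than calling `datetime.now()` inside the function, keeps the check deterministic and easy to test.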

To validate data quality, integrate tools like Great Expectations or dbt tests. Include a Dockerfile so hiring managers can quickly reproduce your environment. With hiring managers spending just 5–10 minutes reviewing a portfolio, clear documentation of your automation and monitoring setups can make your operational skills shine.

How to Present and Improve Your Portfolio

Once you've built your technical projects, the next step is showcasing them in a way that grabs the attention of hiring managers. Your portfolio should be easy to navigate, visually appealing, and leave a lasting impression.

Polish Your LinkedIn and GitHub Profiles

Think of your GitHub profile as your technical portfolio. Start with a professional username and create a Profile README that serves as your introduction. Highlight your tech stack - whether it’s SQL, Python, Apache Spark, or Snowflake - and link to your standout projects. Pin your top 2–3 repositories, choosing ones that show a mix of skills, like building batch pipelines, working with streaming analytics, or managing cloud data warehousing.

On LinkedIn, use the Featured section to link directly to your GitHub projects. Show off certifications, such as AWS Certified Solutions Architect, to underline your expertise. On GitHub, a contribution graph with regular commits signals your active engagement in the field. You can also use LinkedIn to highlight your most impactful projects, creating a seamless lead-in to your capstone work.

Highlight Capstone Projects and Certifications

Quality trumps quantity when it comes to projects. One well-thought-out, detailed capstone project can make a bigger impact than several smaller demos. Focus on projects that showcase your ability to create end-to-end pipelines for complex, real-world scenarios. For instance, imagine a retail streaming analytics project completed in January 2026. It could include Apache Kafka for data ingestion, Spark Structured Streaming for real-time metrics, Snowflake for data storage, dbt for aggregation, and a Streamlit dashboard for inventory alerts. This kind of project demonstrates your ability to handle production-level workflows.

Certifications also play a key role in validating your skills. Entry-level data engineers with a strong portfolio and relevant certifications can command six-figure salaries. To further prove your expertise, include elements like Entity Relationship Diagrams (ERDs) or descriptions of dimensional modeling techniques, such as star schemas, to illustrate your understanding of data design.
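As a rough illustration of the star schema idea, the sketch below builds one fact table and two dimension tables, then answers a typical analytical question with joins. It uses Python's built-in sqlite3 module so it runs anywhere; the table names, columns, and rows are all invented for the example, and a warehouse such as Snowflake or BigQuery would use its own DDL conventions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables hold descriptive attributes.
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, full_date TEXT, month TEXT);

-- The fact table holds measures plus a foreign key to each dimension.
CREATE TABLE fact_sales (
    product_key INTEGER REFERENCES dim_product(product_key),
    date_key    INTEGER REFERENCES dim_date(date_key),
    quantity    INTEGER,
    revenue     REAL
);

INSERT INTO dim_product VALUES (1, 'Laptop', 'Electronics'), (2, 'Desk', 'Furniture');
INSERT INTO dim_date VALUES
  (20260105, '2026-01-05', '2026-01'),
  (20260206, '2026-02-06', '2026-02');
INSERT INTO fact_sales VALUES
  (1, 20260105, 2, 2000.0),
  (2, 20260105, 1, 300.0),
  (1, 20260206, 1, 1000.0);
""")

# Revenue by category and month: join the fact table to both dimensions.
revenue_by_category = conn.execute("""
    SELECT p.category, d.month, SUM(f.revenue) AS revenue
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_key = f.product_key
    JOIN dim_date AS d ON d.date_key = f.date_key
    GROUP BY p.category, d.month
    ORDER BY p.category, d.month
""").fetchall()

for row in revenue_by_category:
    print(row)
# ('Electronics', '2026-01', 2000.0)
# ('Electronics', '2026-02', 1000.0)
# ('Furniture', '2026-01', 300.0)
```

An ERD of these three tables, placed next to the query in your README, shows reviewers the model and its purpose at a glance.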

Leverage DataExpert.io Academy

DataExpert.io Academy offers a range of resources to help you build a competitive portfolio. With boot camps and subscription plans, you gain access to hands-on training, industry tools like Databricks, Snowflake, and AWS, and even guest speaker sessions. These programs provide lifetime access to content, ensuring you have the tools and knowledge to create the portfolio elements hiring managers are looking for most.

Conclusion: Creating a Portfolio That Gets Noticed

Focus on showcasing two to three projects that highlight your ability to build complete pipelines, use modern tools, and achieve measurable results. Each project should include clear architecture diagrams, clean and readable code, effective error handling, and quantifiable outcomes. This demonstrates to hiring managers that you can deliver real business value.

By emphasizing depth over quantity, your portfolio presents a cohesive narrative of your technical choices instead of a scattered list of demos. Make sure your documentation grabs attention right away: summarize your decisions and results clearly, showing your problem-solving skills and engineering judgment.

"One deep, well-documented project that mimics a real data pipeline is worth more than five shallow demos."

- Chris Garzon, Founder, Data Engineer Academy

Keep your portfolio dynamic and current. Update it every 3–6 months with new certifications, achievements, or projects that align with the latest trends. For example, incorporating AI integration or real-time streaming can showcase your ability to adapt to emerging technologies. Pairing cloud certifications with hands-on projects is especially powerful - it proves not just your knowledge but also your ability to apply it effectively.

To help you build a standout portfolio, DataExpert.io Academy offers hands-on boot camps and capstone projects. Their programs in data engineering, analytics engineering, and AI engineering provide access to industry-standard tools like Databricks, Snowflake, and AWS. With practical experience, you'll create projects that truly differentiate you in a competitive job market.

FAQs

What are 2–3 portfolio projects that hiring managers actually care about?

Three project types cover most of what hiring managers look for: a real-time streaming pipeline (for example, Kafka feeding Snowflake), a batch ETL workflow orchestrated with Airflow and processed on a platform like Databricks, and a data modeling project such as a dimensional model built with dbt. Together they show that you can handle practical, hands-on data challenges from ingestion through to analytics-ready tables.

How can I deploy a cloud data pipeline without spending much money?

Start with open-source tools and cloud free tiers. As noted earlier, AWS offers free usage for S3 and Lambda, Snowflake provides a 30-day trial with $400 in credits, and BigQuery allows up to 1TB of queries per month at no cost. Design workflows that scale and use resources efficiently so costs stay under control as your data grows, and tune the pipeline so it consumes only the compute and storage it actually needs.

What should my GitHub README include to get interviews?

Your GitHub README is your chance to make a strong first impression. It should give a clear picture of who you are, spotlight your skills, and summarize your experience.

When it comes to projects, don’t just list them - tell a story. For each one, explain the problem you tackled, how you approached it, and the tools or technologies you used. This approach not only highlights your technical abilities but also shows potential employers how you think and solve problems. It’s a simple way to make your expertise stand out.