Polyglot Persistence: Database Per Service Pattern

Using different databases for different needs can make your application faster, more scalable, and easier to manage. This is the core idea behind polyglot persistence and the database per service pattern in microservices. Here’s how it works:

- Polyglot persistence means using multiple database types (e.g., relational, document, graph) to handle specific data requirements.

- The database per service pattern ensures each microservice has its own database, preventing shared dependencies and increasing system stability.

- Examples: Netflix uses Cassandra for high-volume writes and MySQL for transactions.

Why it matters:

- Scalability: Each service scales its database independently.

- Service Independence: Changes in one service don’t affect others.

- Optimized Databases: Use the best database type for each service (e.g., Redis for caching, MongoDB for flexible data).

However, this approach increases complexity. Teams must manage multiple database technologies and handle challenges like eventual consistency and data synchronization. To make it work, start with general-purpose databases and introduce specialized ones only when needed. Tools like the Saga pattern and API Composition can help manage distributed data.

Why Do Microservices Need Polyglot Persistence? - Cloud Stack Studio

Benefits of the Database Per Service Pattern

The database per service pattern offers the flexibility to scale services independently, maintain autonomy, and choose the best database for specific needs.

Better Scalability and Performance

With this approach, each microservice can scale its database independently, adapting to its unique demand. For instance, during high-traffic events like Black Friday, you could scale a Redis-backed shopping cart service separately from a PostgreSQL-based order management system. Mehmet Ozkaya, a software architect, highlights this advantage:

Since each microservice and its database are separate, they can be scaled independently based on their specific needs.

This separation also minimizes resource contention. In a shared database setup, a long-running analytics query could slow down customer-facing transactions by locking critical tables. Independent databases prevent such interference - heavy queries in one service won’t impact another’s performance. Additionally, high-throughput services can implement specific sharding or replication strategies without disrupting others.

| Database Type | Performance Use Case | Example Service |

|---|---|---|

| Relational (PostgreSQL/MySQL) | Transactional support | Order Management |

| Document (MongoDB) | High-volume reads and flexible JSON structures | Product Catalog |

| Key-Value (Redis) | Low latency for frequent read-write operations | Shopping Cart |

| Graph (Neo4j) | Efficiently querying complex relationships | Social Graph/Recommendations |

| Search Engine (ElasticSearch) | Optimized text-based search | Search Service |

This setup not only boosts performance but also strengthens the independence of individual services.

Service Independence and Reduced Dependencies

By decoupling services, the database per service pattern eliminates the need for cross-team coordination when making schema changes. For example, the inventory team can modify its database schema without waiting for approval from other teams.

This design also isolates faults. If one service encounters a database issue, it won’t cascade into a system-wide failure. As Chris Richardson, a software architect, explains:

The service's database is effectively part of the implementation of that service. It cannot be accessed directly by other services.

This autonomy ensures that each service operates independently while maintaining its own database.

Custom Database Solutions for Each Service

The pattern’s flexibility allows teams to select databases tailored to their service’s unique requirements. Chris Richardson notes:

Each service can use the type of database that is best suited to its needs. For example, a service that does text searches could use ElasticSearch. A service that manipulates a social graph could use Neo4j.

A great example of this is AWS’s modernization reference architecture from February 2026. In this setup, Amazon Aurora supports the Sales service for transactional data, Amazon DynamoDB handles NoSQL key-value operations for the Customer service, and Amazon RDS for SQL Server meets the Compliance service’s regulatory needs. This flexibility ensures that every service uses the most effective tool for its specific workload, avoiding a one-size-fits-all approach.

Database Types Used in Polyglot Persistence

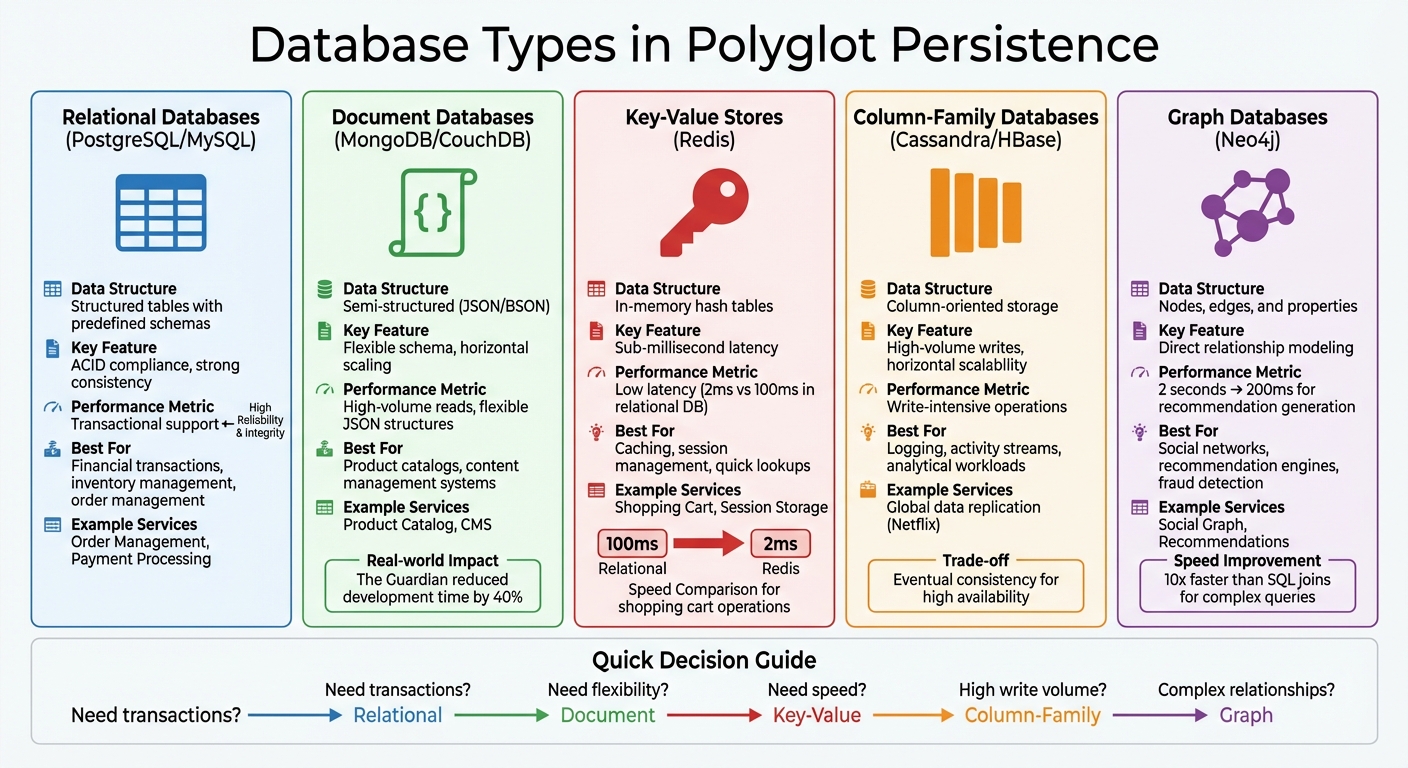

Database Types in Polyglot Persistence: Use Cases and Performance Comparison

Polyglot persistence involves using various database types - relational, document, key-value, column-family, and graph - to handle distinct data patterns and performance needs within microservices architectures. Each database type shines in specific scenarios. For example, relational databases manage structured data with complex relationships, while document databases handle semi-structured data flexibly. Key-value stores excel in quick lookups, column-family databases are built for heavy write operations, and graph databases are perfect for navigating complex relationships. As Martin Fowler, Chief Scientist at ThoughtWorks, puts it:

The relational option might be the right one - but you should seriously look at other alternatives.

Here’s a closer look at these database types and their ideal use cases.

Relational Databases (PostgreSQL)

Relational databases store data in structured tables with predefined schemas and are known for their ACID compliance, ensuring strong consistency. They are a go-to option for scenarios demanding reliability, such as financial transactions or inventory management. PostgreSQL and MySQL are widely used examples, offering transactional integrity for critical operations like payment processing. Their robust structure ensures that even the most complex operations are handled with precision and consistency.

Document Databases (MongoDB)

When flexibility in data models is key, document databases step in. They store data in semi-structured formats like JSON or BSON, allowing developers to adapt and evolve models easily. This makes them an excellent choice for applications like product catalogs or content management systems. MongoDB and CouchDB, for instance, support horizontal scaling, enabling data to be distributed across servers seamlessly. A notable success story comes from The Guardian, which transitioned to MongoDB in 2011 for its content management system. This switch not only boosted productivity but also reduced development time by approximately 40%.

Key-Value Stores (Redis)

Key-value stores are designed for speed, offering sub-millisecond latency by storing data in memory. They act as high-speed hash tables, making them ideal for caching, session management, and quick lookups. For example, shopping cart operations that might take 100 ms in a relational database can be reduced to just 2 ms with Redis. Companies like Netflix use Redis, integrated with their Dynomite layer, to ensure high-speed caching across their microservices. However, since they often lack strong consistency guarantees, they’re best suited for ephemeral or easily regenerable data.

Column-Family Databases (Cassandra)

Column-family databases excel in handling high-volume writes and managing large datasets. They organize data by columns, making them well-suited for write-intensive tasks like logging, activity streams, and analytical workloads. Cassandra and HBase are popular examples, offering horizontal scalability and partition tolerance. Netflix, for instance, uses Cassandra to manage global data replication efficiently across its microservices architecture. While these databases often trade immediate consistency for high availability, they offer tunable consistency options to meet specific needs.

Graph Databases (Neo4j)

Graph databases are built to manage data as nodes, edges, and properties, making them perfect for navigating intricate relationships. They’re widely used in applications like social networks, recommendation engines, and fraud detection. A standout example is how recommendation generation times dropped from 2 seconds using SQL joins to just 200 ms with Neo4j’s graph traversals. This performance boost comes from their ability to directly model and query relationships, avoiding the complexity of multiple SQL joins.

How to Implement the Database Per Service Pattern

Implementing the database per service pattern involves careful planning in three key areas: defining service boundaries, choosing the right database technologies, and managing data consistency. These decisions determine how well your services can operate independently and how scalable your architecture becomes.

Defining Service Boundaries

The first step is breaking your application into distinct business capabilities. Think of functions like "Order Management" or "Product Catalog." Each microservice must fully own its database, with no direct access allowed from other services. This separation should be enforced with technical safeguards. As Chris Richardson, Software Architect and Author, points out:

Keep each microservice's persistent data private to that service and accessible only via its API.

To ensure this privacy, assign a unique database user ID to each service and use database permissions to limit access. If maintaining separate databases for every service feels overwhelming, a schema-per-service approach within a shared database server can be a practical compromise. Each service should clearly own specific data entities, like the Product Service managing catalog tables or the Order Service handling order-related data. This clear ownership prevents conflicts and ensures that each service acts as the authoritative source for its domain. With well-defined boundaries, services can remain isolated, minimizing the impact of issues.

Once boundaries are established, the focus shifts to selecting the best database technology for each service.

Selecting the Right Database Technology

Using a polyglot persistence strategy allows you to match each service with the database technology that best suits its needs. Jeff Carpenter, Technical Evangelist at DataStax, highlights this flexibility:

The appeal of microservices architecture lies in the ability to develop, manage and scale services independently. This gives us a ton of flexibility in terms of implementation choices, including infrastructure technology such as databases.

Start with a general-purpose database and only introduce specialized data stores when absolutely necessary. For example, you can reduce the strain on your primary database by implementing read-write separation, where read traffic is directed to replicas. Sticking to a single data model for each service also helps avoid unnecessary complexity.

Managing Data Synchronization and Consistency

Once you've chosen your database technologies, maintaining synchronization and consistency across services becomes critical. Since traditional ACID transactions don't work across multiple databases, alternative approaches are needed. The Saga pattern is one solution, breaking distributed transactions into smaller, local steps with compensating actions to handle failures. For queries that span multiple services, CQRS (Command Query Responsibility Segregation) can help by separating read and write operations and keeping materialized views updated through events.

While database isolation boosts service independence, it does come with added challenges. You'll need to manage diverse database technologies, backups, and monitoring strategies across your system. Balancing these complexities is key to successfully implementing the database per service pattern.

sbb-itb-61a6e59

Challenges and Trade-offs of Polyglot Persistence

Polyglot persistence sounds appealing, but it comes with its own set of challenges. The added complexity, consistency issues, and operational demands can easily overwhelm teams without proper preparation. Let’s break down the key hurdles that come with adopting this approach.

Higher Operational Complexity

Using multiple database technologies isn’t as simple as it sounds. Each type of database brings its own requirements for installation, configuration, monitoring, backups, and security. For DevOps teams, this means juggling a variety of tools and expertise. In fact, over 60% of microservices in production rely on multiple database types. This creates a critical need for teams to master both SQL and NoSQL systems.

But here’s the catch: every database you add increases the risk of something going wrong. Twain Taylor, from Twain Taylor Consulting, highlights this issue:

The heightened level of attention required by a distributed data storage approach also risks placing a heavy burden on database administrators -- not to mention the extra financial cost imposed by multiple database licensing and maintenance costs.

On top of that, provisioning separate servers for each database can get expensive and complicated. A practical workaround? Stick to a pre-approved set of database technologies instead of allowing endless choices.

Data Consistency and Synchronization Problems

Keeping data consistent across multiple databases is no small feat. Traditional ACID transactions don’t work well across different types of databases, forcing teams to rely on eventual consistency models. Chris Richardson, a software architect, explains it best:

Distributed transactions are best avoided because of the CAP theorem. Moreover, many modern (NoSQL) databases don't support them.

The CAP theorem limits distributed systems to balancing availability and partition tolerance, often at the expense of strong consistency. This means teams must manage synchronization manually, especially when data is duplicated across services for better performance.

Then there’s replication lag - a common issue when using read-only replicas or caching systems. The delay between updates in the primary database and reflected changes in read models can lead to users seeing outdated data if the system isn’t designed to handle these delays properly.

Comparison: Monolithic Database vs. Polyglot Persistence

To better understand the trade-offs, here’s a side-by-side comparison of monolithic databases and polyglot persistence. This highlights the operational and consistency challenges discussed above, along with their respective benefits.

| Feature | Monolithic Database | Polyglot Persistence |

|---|---|---|

| Scalability | Primarily vertical; limited horizontal scaling | Independent, targeted horizontal scaling per service |

| Coupling | Tight; services coupled via shared schema | Loose; services interact only via APIs |

| Operational Complexity | Low; single technology and skill set | High; requires expertise in multiple database types |

| Data Consistency | Strong; immediate ACID guarantees | Eventual; managed via Sagas and events |

| Data Integrity | Enforced by database (foreign keys, joins) | Enforced by application (Sagas, API joins) |

| Maintenance | Simple backups and recovery | Complex; point-in-time recovery becomes difficult |

Monolithic databases shine with their simplicity and strong consistency, but they struggle with scalability and flexibility. On the other hand, polyglot persistence allows for tailored optimization and independent scaling, but it comes with steep operational demands and consistency trade-offs. As Festim Halili from the University of Tetova puts it, "Polyglot persistence increases adaptability / performance / domain alignment but also governance / operational complexity".

Examples and Case Studies

Case studies provide a clear picture of how the database per service pattern can enhance scalability and resilience across industries.

E-commerce Platforms

Walmart Global Tech offers a standout example of the database per service pattern in action. In March 2024, Senior Software Engineer Piyush Shrivastava shared insights into their approach for managing the Orders flow. They separated the Item Service (handling item costs and seller data) from the Inventory Service (tracking stock levels), each with its own independent database. This separation avoids cascading failures and eliminates the need for slow multi-table joins, leading to improved performance and system resilience. To integrate data when necessary, Walmart relies on API composition.

The database per service pattern suggests having independent, scalable and isolated databases for each microservice instead of having a common datastore. Each service should independently access its own DB, services cannot access each other's DBs directly. - Piyush Shrivastava, Senior Software Engineer, Walmart Global Tech

Netflix takes a similar approach by using specialized databases tailored to different workloads. For instance, Cassandra handles high-volume write operations, MySQL manages transactional data, EVCache ensures low-latency reads, and Elasticsearch supports search and analytics. Between 2017 and 2018, Netflix faced challenges with its Viewing History service due to wide rows in Cassandra, which caused heap pressure and latency issues. To address this, they restructured the data model into two column families: a "live" family for recent writes and a "roll-up" family for compressed historical data. They also replaced Cassandra with Dynomite (a Redis-based solution) for distributed queue management, reducing operational complexity.

These strategies are not limited to retail. Social media platforms have also adopted similar methods to enhance performance.

Social Media Applications

Social platforms like LinkedIn and Netflix have embraced these strategies to handle immense amounts of social data effectively.

LinkedIn processes billions of requests daily by distributing its data across specialized systems. For primary online storage, they use Espresso, while Voldemort (a distributed key-value store) handles high-throughput workloads. Additionally, Kafka is employed for streaming data. This architecture enables LinkedIn to efficiently support graph-based social connections and search functionalities while ensuring high availability.

Netflix also leverages polyglot persistence to optimize its content platform. For example, EVCache provides read and write latencies of less than 1ms for critical services like CDN URL generation. Meanwhile, Elasticsearch and Kibana enable near-real-time log analysis, significantly improving incident resolution times - from over two hours to under ten minutes for playback errors. These advancements support Netflix's massive user base of over 109 million subscribers across 190 countries.

By providing polyglot persistence as a service, developers can focus on building great applications and not worry about tuning, tweaking, and capacity of various back ends. - Roopa Tangirala, Engineering Manager, Netflix

Best Practices and Decision Framework

Building on the challenges and strategies discussed earlier, here’s a guide to implementing polyglot persistence effectively.

Best Practices for Database Per Service

At the heart of the database-per-service pattern lies data encapsulation - each microservice should own its data and make it accessible exclusively through its API. Skipping this principle can lead to hidden dependencies that ultimately dismantle the architecture.

Without some kind of barrier to enforce encapsulation, developers will always be tempted to bypass a service's API and access its data directly. - Chris Richardson, Author of Microservices Patterns

To ensure modularity, rely on more than just documentation. Use technical safeguards like assigning each microservice its own database user ID with specific permissions or leveraging cloud-native IAM policies. When it comes to isolation, you have a few options: private tables for minimal overhead, private schemas for a balanced approach, or fully dedicated database servers for maximum separation. A good starting point is using schemas, as they offer clear data ownership without overwhelming operational costs.

For local transaction consistency, adopt the Saga pattern. For cross-service queries, consider API Composition or CQRS. To cut down on expensive cross-service calls, use the Materialized View Pattern, which duplicates data strategically and improves system resilience.

Operational complexity is inevitable, so automate backups, monitoring, and deployments for all databases. Shift your mindset from immediate consistency to eventual consistency, using asynchronous messaging to sync data across services.

Once these best practices are in place, use the decision framework below to adapt polyglot persistence to your unique business needs.

Decision Framework for Polyglot Persistence

Polyglot persistence offers scalability and independence, but it’s not a one-size-fits-all solution. Start by assessing your team’s size and scaling needs. For small teams or startups, sticking with a monolithic database is often more practical. Only move to the database-per-service model when the benefits of loose coupling and independent scaling outweigh the added complexity.

The first step is to identify your data domains and their specific requirements. Does your data require transactional integrity, need to support flexible content, handle session data, or model complex relationships?. Match each domain to the way its data is accessed - whether it’s full-text search, graph traversal, or high-volume writes. Use the CAP theorem to narrow down the two most critical needs for each service: consistency, availability, or partition tolerance.

Begin with a general-purpose database like PostgreSQL and integrate specialized databases only when necessary. Assign clear ownership to ensure every piece of data has a single authoritative source, avoiding synchronization issues. Plan for graceful degradation in case one database in your ecosystem fails. Unified monitoring and logging across all database types are essential for spotting bottlenecks.

Apply Polyglot Persistence. Let each team choose their own persistence mechanism (perhaps from a pre-selected set) that is appropriate to their particular business problem and implementation challenges. - Cloud Adoption Patterns

Finally, document your database decisions for each domain. This ensures that institutional knowledge isn’t lost over time.

Conclusion

The database per service pattern plays a key role in keeping microservices architectures loosely coupled. By giving each service complete ownership of its data and limiting access to it through APIs, you avoid the tight dependencies often found in monolithic systems. This separation allows teams to work independently, enabling faster development, deployment, and scaling without interference from shared schemas or other teams.

Another crucial aspect is selecting the right database technology for each service. This idea, known as polyglot persistence, lets services choose the database that best fits their specific needs - like PostgreSQL for transactional data, Redis for caching, or Neo4j for social graphs. This approach avoids the pitfalls of trying to make a single database handle every possible workload. As metapatterns.io aptly puts it:

It is impossible for a single database to be good at everything.

However, there are trade-offs. Shifting from immediate ACID compliance to eventual consistency introduces complexity, requiring patterns like the Saga pattern and tools like API Composition or CQRS for data aggregation. Additionally, managing multiple database systems brings operational challenges, including varied backup, monitoring, and scaling procedures.

To strike a balance, start with a versatile database like PostgreSQL and introduce specialized databases only when the benefits outweigh the added complexity. Maintain modularity with strict database-level access controls - not just documentation - and consider strategies like materialized views to minimize cross-service calls. Before fully adopting this pattern, ensure your team is equipped to handle the demands of managing multiple database technologies.

At its core, the database per service pattern isn't about using every database technology available. It's about giving each service the independence and adaptability it needs to grow and evolve without becoming a bottleneck.

FAQs

What challenges come with using polyglot persistence in microservices?

Implementing polyglot persistence in microservices comes with its fair share of challenges, largely due to the intricacies of managing multiple database types. Since each database is tailored to specific use cases, teams need to develop expertise across a variety of technologies. This, in turn, complicates maintaining data consistency and ensuring smooth synchronization between services.

One of the trickiest hurdles is preserving data integrity when transactions involve multiple databases. Coordinating updates across different systems often calls for advanced architectural patterns like event sourcing or CQRS. On top of that, accessing and aggregating data from various sources can make application logic more complex and potentially affect performance.

Although polyglot persistence offers the advantage of tailoring databases to specific data needs, it requires meticulous planning, skilled developers, and a well-designed infrastructure to manage these challenges efficiently.

How does the database per service pattern improve scalability in microservices?

The database per service pattern boosts scalability by giving each microservice its own independent database. This setup allows services to scale their databases according to their unique workloads without interfering with others. The result? Reduced bottlenecks and better use of resources.

Separating databases also means each service can choose the database technology that best fits its specific requirements. This flexibility enhances performance and supports smooth adjustments as demands evolve.

When is it a good idea to use the database per service pattern?

The database per service pattern is a practical solution when your team requires independent development, deployment, and scaling for microservices. It’s particularly effective when each microservice has distinct data needs and can benefit from a database designed specifically for its requirements.

This pattern also encourages loose coupling between microservices, which makes the system more resilient and easier to maintain. By letting each service manage its own data, teams can sidestep bottlenecks and achieve better performance and scalability throughout the system.