Ultimate Guide to AI Engineering Portfolios

Want to land an AI engineering job in 2026? Your portfolio is more important than your resume.

The AI job market in 2026 is more competitive than ever: job postings have tripled, but applications have surged tenfold. Portfolios showcasing measurable results and end-to-end projects are now the key to standing out. Recruiters spend less than 10 seconds on resumes but engage 80% more with GitHub projects featuring runnable code or live demos.

Here’s what makes a winning AI portfolio:

- 3–5 polished projects demonstrating skills across the AI lifecycle (data collection, deployment, monitoring).

- Focus on solving practical problems with tools like PyTorch, Hugging Face, and LangChain.

- Include live demos using Streamlit or Gradio and clear documentation with measurable outcomes (e.g., "improved F1 score by 22%").

- Tailor projects to your career level: beginners should focus on clean implementations, while advanced engineers should highlight scalable, business-driven solutions.

In 2026, portfolios are the proof employers need. Whether you're targeting entry-level or senior roles, your portfolio should demonstrate your ability to deliver production-ready AI systems that solve real challenges.



Why AI Engineering Portfolios Matter in 2026

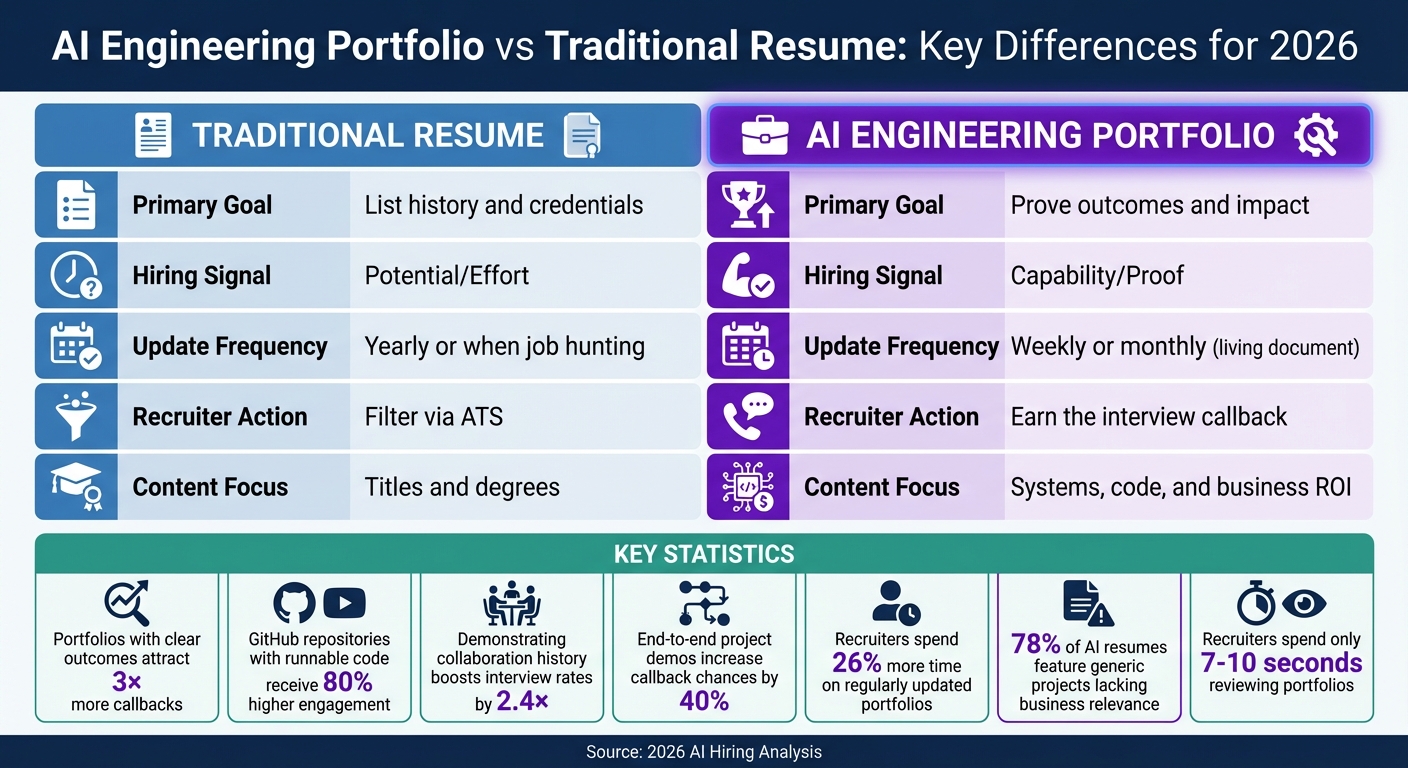

AI Engineering Portfolio vs Traditional Resume: Key Differences for 2026

The way candidates are evaluated in the AI job market has undergone a major shift. While resumes still help you get past Applicant Tracking Systems (ATS), it's your portfolio that can actually secure an interview callback. Degrees and certifications may act as initial filters, but they often fall short of showcasing the practical skills needed in such a fast-changing field.

The statistics back this up. Between 2021 and 2025, job postings requiring AI expertise increased 200 times. And according to the World Economic Forum, the demand for AI professionals is expected to outpace the available talent pool by 30–40% by 2027. In such a competitive market, generic resumes are losing their effectiveness.

Employers are increasingly prioritizing tangible results over credentials. Recruiters are now 50% more likely to search for candidates based on specific skills, with LinkedIn reporting a 25% rise in the use of skills-based filters since 2019. Portfolios have become the go-to way to showcase the type of systems thinking needed to integrate AI solutions and APIs - something that can't be conveyed by simply listing job titles or courses. This shift underscores why portfolios packed with real-world results are now essential for career growth.

How Portfolios Influence AI Hiring Decisions

In today’s hiring process, portfolios are valued for their ability to demonstrate real work rather than just listing credentials. Hiring managers want to see proof that you can handle the entire AI lifecycle - from gathering messy data and building AI-enabled data pipelines to designing models, integrating APIs, and deploying in the cloud. They’re looking for candidates who can show how AI components fit into larger systems and workflows. Additionally, clear documentation of decision-making - like how you balanced trade-offs or managed failures - can make a big difference.

The most impactful portfolios highlight measurable achievements, such as ROI improvements, efficiency boosts, or hitting specific KPIs. Here’s why this matters:

- Portfolios with clear outcomes attract 3× more callbacks.

- GitHub repositories featuring runnable Jupyter Notebooks or live API links receive 80% higher engagement from hiring managers.

- Demonstrating collaboration history can boost interview rates by 2.4×.

- Including end-to-end project demos increases callback chances by 40%.

Keeping your portfolio updated is equally important - recruiters spend 26% more time reviewing portfolios that are regularly refreshed.

However, not all portfolios hit the mark. A 2023 analysis of over 10,000 AI resumes revealed that 78% featured generic projects like MNIST or Titanic datasets, which lacked any business relevance. These portfolios often fail to grab attention, as recruiters typically spend just 7–10 seconds reviewing them. As Souradip Pal of Stackademic puts it:

"Recruiters don't hire potential - they hire proof".

This growing emphasis on practical, demonstrated skills makes it clear: portfolios need to reflect real-world AI challenges to stand out.

How Portfolios Open Career Doors

Portfolios showcasing measurable results don’t just influence hiring decisions - they also lead to better pay and opportunities. The shift toward skills-based hiring has expanded the talent pool by up to 20%, giving candidates who demonstrate working code and deployed systems a clear edge. Entry-level AI engineers in the U.S. can expect salaries between $95,000 and $120,000, while experienced professionals often earn $160,000 to $200,000 annually - proof of the high value placed on demonstrated abilities.

| Feature | Traditional Resume | AI Engineering Portfolio |
|---|---|---|
| Primary Goal | List history and credentials | Prove outcomes and impact |
| Hiring Signal | Potential/Effort | Capability/Proof |
| Update Frequency | Yearly or when job hunting | Weekly or monthly (living document) |
| Recruiter Action | Filter via ATS | Earn the interview callback |
| Content Focus | Titles and degrees | Systems, code, and business ROI |

Portfolios help reduce hiring risks by making technical skills both visible and verifiable through live demos and code repositories. As Ally Miller, an AI specialist, notes:

"AI is one of the few fields where ability is visible. You don't need permission, credentials, or a formal title to start building proof".

The industry is moving away from "model tinkerers" and "prompt engineers" toward professionals who can solve real business problems with production-ready AI solutions. Your portfolio serves as evidence of that capability, playing a pivotal role in securing opportunities in the competitive AI landscape of 2026.

For practical training and resources to build your portfolio, check out DataExpert.io Academy.

Building Your Portfolio Based on Experience Level

Your portfolio should reflect where you are in your AI career. The projects you include need to challenge you just enough to grow your skills while proving to recruiters that you can tackle practical problems. By 2026, recruiters are looking for real-world solutions that demonstrate your ability to integrate AI into broader systems, regardless of your experience level.

At every stage, your portfolio should highlight your ability to manage the entire AI lifecycle. Beginners should focus on clean, foundational implementations. Intermediate developers need to show they can handle messy, unstructured data and deployment issues. Advanced engineers must demonstrate they can design scalable systems that deliver measurable outcomes, with a growing emphasis on business impact as they progress.

Projects for Beginners

If you're just starting out, your projects should showcase your understanding of core AI concepts and your ability to create functional demos. Here's the key: only 10% of AI work is about algorithms. The rest involves working with data (20%) and connecting the system to tools, people, and processes (70%). Your projects should reflect this balance.

Begin with structured data projects using techniques like Linear Regression, Logistic Regression, Decision Trees, and K-means Clustering. For example, a customer segmentation project based on behavioral data can demonstrate your ability to identify patterns and explain clustering decisions. In Natural Language Processing (NLP), try building a sentiment analysis tool or a spam email classifier that handles tokenization, vectorization, and text preprocessing. These types of projects usually take two to three weeks to complete.

Computer vision projects are also essential. A simple handwritten digit recognizer using the MNIST dataset or a cats-vs-dogs image classifier can showcase your understanding of Convolutional Neural Networks and transfer learning. To stand out in 2026, consider adding a Retrieval-Augmented Generation (RAG) project, such as a PDF-chat application. This type of project shows you know how to integrate Large Language Models (LLMs) with vector databases and implement basic safeguards, like a 0.7 confidence threshold or retry logic for API calls.
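The two safeguards mentioned above, a confidence threshold and retry logic, can be sketched in a few lines. This is a minimal illustration rather than a production pattern: `call_with_retry`, `answer_or_fallback`, and the fallback message are hypothetical names, and the sketch assumes your RAG pipeline can return an `(answer, confidence)` pair, which is something you would have to define yourself (for example, from retrieval similarity scores).

```python
import time
from typing import Callable, Tuple

CONFIDENCE_THRESHOLD = 0.7  # the threshold mentioned above; tune it on your own data
FALLBACK = "I could not find a confident answer in the uploaded PDFs."


def call_with_retry(fn: Callable[[], Tuple[str, float]],
                    max_attempts: int = 3,
                    base_delay: float = 0.5) -> Tuple[str, float]:
    """Call fn, retrying with exponential backoff when it raises."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except Exception:  # in real code, catch only the client's transient errors
            if attempt == max_attempts:
                raise  # out of attempts: surface the original error
            time.sleep(base_delay * 2 ** (attempt - 1))


def answer_or_fallback(fn: Callable[[], Tuple[str, float]],
                       threshold: float = CONFIDENCE_THRESHOLD,
                       max_attempts: int = 3,
                       base_delay: float = 0.5) -> str:
    """Return the model's answer only when its confidence clears the threshold."""
    answer, confidence = call_with_retry(fn, max_attempts, base_delay)
    return answer if confidence >= threshold else FALLBACK
```

Wrapping the model call this way keeps the "what if the API fails or the model is unsure" decisions in one place, which is easy to point to in a README.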

The best beginner projects are specific and tailored. Instead of creating a generic "AI app", build something with a clear purpose - like a tool for a "bootcamp student struggling with SQL PDFs". Use a small, high-quality dataset of 5-10 PDFs rather than a massive, random collection.

Deploy your projects using tools like Streamlit or Gradio, and host them on platforms such as Hugging Face Spaces or Streamlit Community Cloud (Vercel is a better fit for a separate JavaScript front end than for a long-running Python app). This allows recruiters to interact with your work live. Your README file should clearly outline the problem, explain your model architecture, and include measurable results - e.g., "improved F1 score by 22%". As Shin Bhide puts it:

"AI engineers aren't judged by degrees, they're judged by what they build".

For tools, start with GitHub, Hugging Face, and Google Colab, all of which offer free tiers suitable for beginners. A solid beginner project can typically be built in 10-20 focused hours on a laptop with at least 8 GB of RAM and Python 3.10+.

Projects for Intermediate Developers

At the intermediate level, your portfolio needs to show that you can go beyond experimentation. Recruiters want to see that you can handle the "hidden 90%" of AI work - things like data cleaning, implementing guardrails, and solving deployment challenges.

To stand out, focus on domain-specific projects. For example, instead of a basic sentiment analyzer, build a chatbot using Rasa or BERT, or create a text summarizer with Seq2Seq models. In computer vision, move beyond simple classifiers to tasks like inventory object detection with YOLOv8 or defect detection using U-Net. If you're interested in finance, consider fraud detection projects using Isolation Forest and SMOTE, or churn prediction models with XGBoost.

Intermediate developers should also focus on creating agentic workflows - systems where AI manages tasks like tool selection, state management, and error handling. A RAG-based system that compares the model's answers to retrieved chunks is a great way to show this capability. Use advanced metrics such as F1, AUC, and precision-recall, and incorporate explainability tools like SHAP to justify your model's decisions.
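To make "compare the model's answers to retrieved chunks" concrete, here is a rough sketch of a groundedness check. It uses plain token overlap as a crude stand-in for what a real system would more likely do with embedding similarity or an LLM judge; the function names and the 0.6 support cutoff are assumptions chosen for illustration.

```python
import re
from typing import List, Set


def tokens(text: str) -> Set[str]:
    """Lowercased alphanumeric tokens of a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def support_score(sentence: str, chunks: List[str]) -> float:
    """Fraction of the sentence's tokens found in its best-matching chunk."""
    words = tokens(sentence)
    if not words:
        return 1.0  # nothing to verify
    return max((len(words & tokens(c)) / len(words) for c in chunks), default=0.0)


def unsupported_sentences(answer: str, chunks: List[str],
                          min_support: float = 0.6) -> List[str]:
    """Answer sentences whose wording is poorly covered by every retrieved chunk."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]
    return [s for s in sentences if support_score(s, chunks) < min_support]
```

An agentic workflow could use the returned list to trigger a re-retrieval, a retry, or an "I'm not sure" response instead of showing the unsupported sentence to the user.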

| Project Type | Beginner Level | Intermediate Level |
|---|---|---|
| NLP | Basic Sentiment Analyzer (scikit-learn) | Custom Chatbot (Rasa/BERT) or Text Summarizer (Seq2Seq) |
| Computer Vision | Simple Image Classifier (Transfer Learning) | Inventory Object Detection (YOLOv8) or Defect Detection (U-Net) |
| Finance | Customer Segmentation (K-Means) | Fraud Detection (Isolation Forest/SMOTE) or Churn Prediction (XGBoost) |
| Healthcare | Disease Prediction (Tabular Data) | Medical Image Classification (ResNet/DenseNet) |

Pick projects that address areas where you're less experienced. For instance, if you've never worked with containers, use Docker. If APIs are new to you, deploy a project using FastAPI. Document every step of the process, from data extraction to experiment tracking with tools like MLflow or Weights & Biases. Your README should also include baseline comparisons. Aim to demonstrate proficiency with LangChain, vector databases like ChromaDB or FAISS, Hugging Face Transformers, and deployment frameworks.

As Sammi Cox points out:

"Engineers who thrive with AI are the ones who treat reliability, monitoring, and safety as first-class features, not afterthoughts".

This foundation prepares you for advanced-level projects that focus on delivering measurable business results.

Projects for Advanced Engineers

At the senior level, your portfolio must showcase projects that solve real-world business problems. These projects should highlight your expertise in areas like multi-agent systems, real-time data streaming, and multi-modal analysis that combines text, image, and sensor data. The emphasis here is on complexity and business value.

For example, in March 2024, Zen van Riel, a Senior AI Engineer at GitHub, created a PDF Intelligence System using RAG architecture. It processed 100-page documents in just 30 seconds with 92% accuracy on factual questions, costing only $0.02 per document. This project played a key role in landing him a senior position at a major tech company. Another example is his Multi-Agent Customer Service System, which achieved a 65% automation rate in simulations of 1,000 conversations, potentially saving $500,000 annually at scale.

Advanced projects require end-to-end ownership - from data engineering pipelines to deployment and performance monitoring. Build systems capable of handling high volumes, like Zen van Riel's Real-Time Content Moderator, which analyzed over 1,000 items per minute with a false positive rate below 2%. Include architecture diagrams in your README to demonstrate senior-level design skills.

Avoid generic projects. Instead, focus on creating systems with real-world complexity. Examples include multi-agent systems with automatic escalation paths, medical diagnosis assistants, predictive maintenance for financial assets, or drone navigation systems using reinforcement learning. Emerging areas like multimodal learning - combining CNNs for images with Transformers for text - are also worth exploring. AI applications for sustainability, such as optimizing smart energy grids with deep reinforcement learning, are gaining traction.

Quantify your results with clear metrics, such as "reduced manual review time by 80%" or "achieved 99% accuracy in data extraction". A well-crafted portfolio can lead to job offers with salaries 30-40% higher than initial expectations, potentially adding over $50,000 annually for senior roles.

As Zen van Riel explains:

"My portfolio wasn't about showing I could code, it was about proving I could deliver business results through AI implementation".

For hands-on training and capstone projects that prepare you to build production-ready AI systems, check out DataExpert.io Academy.

Tools and Technologies for AI Engineering Projects

Your choice of tools speaks volumes about your readiness to tackle real-world AI challenges. Some 78% of organizations already use AI in at least one business function. Recruiters expect candidates to show proficiency with the latest frameworks, cloud platforms, and generative AI tools. The tools you master should align with your career aspirations - whether you aim for research, production engineering, or roles centered on generative AI.

The tools you pick also determine how efficiently you can create and deploy projects. More than 70% of hiring managers prioritize familiarity with deployment tools and cloud platforms like AWS, Azure, and GCP - even for entry-level roles. Your portfolio should demonstrate your ability to deliver complete AI systems, from data preparation to deployment and monitoring. Choosing tools that cover the entire AI lifecycle reinforces your ability to handle end-to-end projects, as discussed earlier.

Machine Learning and Deep Learning Frameworks

The frameworks you use help validate your technical expertise with industry-standard tools. PyTorch and TensorFlow dominate the deep learning space, each excelling in different areas. PyTorch, developed by Meta, is ideal for research and rapid prototyping due to its dynamic computational graph and Python-native design. It’s particularly strong in computer vision and reinforcement learning. TensorFlow, created by Google, shines in large-scale production, offering support for mobile (TF Lite), web, and cloud applications.

For classic machine learning tasks like regression or clustering, Scikit-learn remains the go-to framework. If you’re new to deep learning, Keras provides a beginner-friendly API for building neural networks, especially for NLP and recommendation systems; it ships with TensorFlow as tf.keras and, since Keras 3, can also run on JAX and PyTorch backends. For NLP-specific projects, Hugging Face Transformers is indispensable, offering pre-trained models compatible with both PyTorch and TensorFlow, saving you from building language models from scratch.

| Framework | Strength | Use Case | Developer Experience |
|---|---|---|---|
| PyTorch | Flexibility & Research | Computer Vision, Reinforcement Learning | High (Python-native) |
| TensorFlow | Production & Scalability | Enterprise Apps, Mobile/Edge, TPUs | Moderate (Improved with Keras) |
| Keras | Ease of Use | Simple Neural Networks, Fast Iteration | Very High (Beginner-friendly) |
| Scikit-learn | Classic ML | Tabular Data, Regression, Clustering | High (Standard API) |

PyTorch and TensorFlow keep converging: TensorFlow has run eagerly (dynamic execution, like PyTorch) by default since version 2.0, while PyTorch has added production tooling such as TorchServe. Your choice should align with your project’s goals - PyTorch for experimentation, TensorFlow for production stability, and Scikit-learn for traditional ML tasks.

Cloud Platforms and MLOps Tools

Operationalizing AI projects requires robust cloud and MLOps tools. Platforms like AWS SageMaker, Google Cloud Platform’s Vertex AI, Microsoft Azure ML, and Databricks offer end-to-end solutions, from data ingestion to model deployment. AWS SageMaker stands out for its governance features and templates, while GCP Vertex AI integrates seamlessly with Google’s ecosystem, including Gemini foundation models.

For tracking experiments, MLflow is the industry standard, especially version 3.x with its GenAI features. Released in mid-2025, MLflow 3 introduced tools for prompt tracing and evaluation using "LLM judges". Alternatives like Weights & Biases and Neptune.ai are also popular for logging hyperparameters, code commits, and model artifacts. According to AI researcher Rahul Kolekar:

"MLOps - the discipline of operationalizing machine learning - has matured into a core engineering function in 2026."

Workflow orchestration tools are equally important. Apache Airflow handles batch ETL processes, while Kubeflow Pipelines and Argo Workflows are Kubernetes-native options for containerized ML workflows. For model serving, tools like KServe, Seldon Core, and BentoML are widely used, while NVIDIA Triton excels in GPU-optimized inference.

Modern AI stacks also incorporate vector databases like Pinecone, Milvus, and Weaviate for managing embeddings in Retrieval-Augmented Generation (RAG). Feature stores such as Feast (open-source) and Tecton (enterprise) ensure consistent feature use across training and real-time inference, avoiding "training-serving skew". Implementing experiment tracking from the start ensures reproducibility and ties every model run to a specific code commit.
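The idea of tying every model run to a code commit can be illustrated without any tracking server. The sketch below is a deliberately tiny stand-in for what MLflow or Weights & Biases do for you, not a replacement for them: it appends each run to a JSON Lines file along with the current Git commit. The file layout and the function names (`log_run`, `load_runs`, `best_run`) are assumptions made for this example.

```python
import json
import subprocess
import time
from pathlib import Path
from typing import Any, Dict, List, Optional


def current_commit() -> str:
    """Return the current Git commit hash, or 'unknown' outside a repository."""
    try:
        result = subprocess.run(["git", "rev-parse", "HEAD"],
                                capture_output=True, text=True, check=True)
        return result.stdout.strip()
    except (subprocess.CalledProcessError, OSError):
        return "unknown"


def log_run(path: str, params: Dict[str, Any], metrics: Dict[str, float],
            commit: Optional[str] = None) -> Dict[str, Any]:
    """Append one experiment record (params, metrics, commit) to a JSONL file."""
    record = {
        "timestamp": time.time(),
        "commit": commit if commit is not None else current_commit(),
        "params": params,
        "metrics": metrics,
    }
    with Path(path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record


def load_runs(path: str) -> List[Dict[str, Any]]:
    """Read all logged runs back, oldest first."""
    with Path(path).open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


def best_run(runs: List[Dict[str, Any]], metric: str) -> Dict[str, Any]:
    """Pick the run with the highest value of the given metric."""
    return max(runs, key=lambda r: r["metrics"][metric])
```

With records like these, any result quoted in a README can be traced back to the exact commit and parameters that produced it.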

Generative AI and New Technologies

Generative AI tools have become essential for AI portfolios in 2026. Recruiters prioritize skills in LLMs and RAG architectures. Tools like LangChain and LlamaIndex streamline generative AI workflows, while vector databases (e.g., Pinecone, ChromaDB, FAISS, Milvus) power semantic search and retrieval.

Interactive demonstrations add significant value to your portfolio. Platforms like Streamlit and Gradio allow you to create live, interactive projects that recruiters can test. Deploy these projects on a host that can run a Python app, such as Hugging Face Spaces or Streamlit Community Cloud, and use Vercel for any separate web front end. For model APIs, FastAPI exposes a model behind HTTP endpoints, and a containerization tool like Docker packages the service so it runs the same way everywhere. As NeuraTech Academy puts it:

"A project that a recruiter can click and interact with is worth ten projects that live only in code."

Ethical AI practices are increasingly important. Showcase your commitment to responsible AI by using tools to detect hallucinations (semantic entropy) and monitor data drift (KS test, PSI). For LLMOps, platforms like Arize AI, WhyLabs, and Fiddler offer observability for prompt versioning, hallucination diagnostics, and token cost management. Treat prompts like application code by using version control systems to track changes and enable rollbacks. Incorporating AI-assisted development tools like Google AI Studio or Gemini further demonstrates your ability to work with modern coding tools.
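As one example of the drift checks named above, the Population Stability Index (PSI) compares how a feature is distributed in training data versus live data. The sketch below is a simplified, standard-library version that assumes equal-width bins derived from the reference sample; the bin count and the small `eps` floor for empty bins are illustrative choices, and production tools handle binning more carefully.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` against the `expected` reference."""
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("reference sample has no spread to bin")
    width = (hi - lo) / bins

    def proportions(sample: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for x in sample:
            idx = int((x - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values to edge bins
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm below stays defined.
        return [max(c / len(sample), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A commonly cited rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift, though those cutoffs are conventions rather than guarantees. Logging this value per feature on a schedule is a simple way to show monitoring in a portfolio project.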

For hands-on practice with tools like Databricks, Snowflake, and AWS - and capstone projects that prepare you for production-level AI systems - check out DataExpert.io Academy.

Choosing and Documenting Your Portfolio Projects

The projects you choose for your portfolio can make or break your chances with potential employers. They showcase whether your skills align with industry expectations. To start, narrow your focus: are you targeting research, engineering, or MLOps roles? Marina Wyss, an AI Engineering Coach and former Hiring Panelist at Amazon, emphasizes:

"These standard chatbot tutorials and RAG follow-alongs simply aren't complex enough, or close enough to what we do in industry to show employers you have the skills they need."

Choosing Projects That Match Your Career Goals

Your portfolio should reflect the specific demands of your desired role. For research-oriented positions, prioritize projects that demonstrate mathematical depth and innovative thinking. For instance, re-implementing a recent research paper from scratch is a great way to highlight your ability to work with complex architectures. Engineering roles, on the other hand, value fully operational, production-ready systems. If MLOps is your focus, projects should showcase skills like monitoring, containerization, and orchestration - for example, Docker for packaging, FastAPI for serving, and MLflow for experiment tracking.

Projects that deal with messy, real-world data are especially valuable. They show you can overcome data preparation challenges and build systems that integrate multiple components. TalentSprint sums it up well:

"Your portfolio needs more than just AI projects - it needs to show real business results."

| Target Role | Project Focus | Key Technologies to Showcase |
|---|---|---|
| AI Specialist / Researcher | Novel architectures, methodology, bias/fairness research | PyTorch, TensorFlow, Research Papers |
| Machine Learning Engineer | End-to-end applications, model optimization, messy data | Scikit-learn, Keras, Hugging Face |
| MLOps Engineer | Deployment, monitoring, system architecture, scalability | Docker, FastAPI, MLflow, Weights & Biases |

The next step is documenting these projects in a way that resonates with technical reviewers.

How to Document Projects Effectively

Clear documentation is critical. Start by stating the problem, listing your assumptions, and walking through your solution step-by-step. Separate technical details - like algorithms, experimental setups, and results - from your interpretations. As Xiao Wu, an ML Engineer, explains:

"Tech writing is a reflection of thought, not vocabulary."

Each project should include a detailed README file with setup instructions, an overview of the technology stack, and architectural diagrams. Use ADRs (Architectural Decision Records) to explain your technical choices. Document your iterations transparently, showcasing "before and after" versions of prompts or model adjustments to highlight your learning process.

Include objective metrics such as precision, recall, F1 scores, or latency improvements. A TL;DR summary at the top can grab attention quickly. Don't shy away from sharing failures and how you adapted - this shows resilience and problem-solving skills.
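For readers who want to see where numbers like these come from, the following sketch computes precision, recall, and F1 for a binary classifier from scratch, plus the relative improvement over a baseline that a claim such as "improved F1 score by 22%" implies. In practice you would likely use scikit-learn's metric functions; the helper names here (`binary_metrics`, `relative_improvement`) are made up for this example.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int],
                   positive: int = 1) -> Dict[str, float]:
    """Precision, recall, and F1 for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"precision": precision, "recall": recall, "f1": f1}


def relative_improvement(baseline: float, new: float) -> float:
    """Relative change versus a baseline, e.g. 0.22 means 'improved by 22%'."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (new - baseline) / baseline
```

Stating both the baseline and the new value in the README (not just the percentage) lets a reviewer check whether the gain is relative or absolute.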

For a professional touch, use frameworks like Model Cards and Datasheets for Datasets to detail your model’s performance, biases, and data sources.

Once your projects are documented, focus on balancing variety and depth to create a well-rounded portfolio.

Balancing Variety and Depth in Your Portfolio

Aim for a mix of 3–5 smaller projects that showcase a range of techniques - like Gradient Boosted Trees, Neural Networks, and Clustering. Alongside these, dedicate significant effort to one or two complex, end-to-end systems. Egor Howell, a Machine Learning Engineer, puts it bluntly:

"A model in a Jupyter notebook has zero business value."

Your deeper projects should cover the full lifecycle, from data collection to API deployment. Production-ready projects should include features like prompt management systems, semantic search, multi-agent architectures, and robust monitoring dashboards. Show versatility by tackling projects across various domains - such as chatbot optimization or automated data extraction - and back them up with measurable metrics, like reducing response times by 35%.

Ultimately, your portfolio should demonstrate that you can link technical skills to business outcomes. For a hands-on way to build production-level AI systems that balance variety and depth, check out the AI Engineering Boot Camp offered by DataExpert.io Academy.

Presenting and Deploying Your Portfolio

Once your projects are documented, the next step is making them accessible and appealing to recruiters. Did you know that 87% of tech recruiters check GitHub profiles during hiring? And they spend just about 90 seconds per profile! This means your presentation needs to be sharp and professional to stand out. Below are strategies to ensure your portfolio highlights the technical depth and business impact of your work, grabbing the attention of hiring managers.

Organizing Your Portfolio on GitHub

Think of your GitHub profile as your technical resume. Start by adding a professional photo and crafting a concise bio that reflects your expertise in AI, such as "NLP Specialist" or "MLOps Engineer." Pin 4–6 of your best repositories at the top of your profile for easy access, and create a Profile README (a repository named after your username) to summarize your tech stack, highlight key projects, and outline your learning goals.

Each repository should include a clear README.md file with the following:

- A brief overview of the project

- Setup instructions for running the code

- Key metrics or results

- A link to a live demo, if available

Use clear and descriptive commit messages to show professionalism, and don't forget to include a .gitignore file to keep your repositories tidy. For security, always use environment variables for sensitive data like API keys instead of committing them directly.
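Reading secrets from environment variables might look like the sketch below. The variable name `DEMO_API_KEY` and the helper names are hypothetical; the point is that the key never appears in the repository, and that a missing key fails loudly with a helpful message instead of failing later inside an API call.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required environment variable is not set."""


def get_secret(name: str) -> str:
    """Read a secret from the environment, failing clearly if it is absent."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise MissingSecretError(
            f"Set the {name} environment variable before running this project."
        )
    return value


def mask(secret: str, visible: int = 4) -> str:
    """Hide all but the last few characters, for safe logging."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]
```

A typical call would be `api_key = get_secret("DEMO_API_KEY")`, with the README telling users which variables to set. If you keep local values in a `.env` file, list that file in `.gitignore`.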

Adding visuals like charts or screenshots to your README files can make your results more tangible. Repositories with runnable Jupyter Notebooks or live API links tend to see up to 80% higher engagement. Even if you're working solo, using features like branching and pull requests can demonstrate your understanding of collaborative workflows.

If you want to go beyond GitHub, creating a personal website can help showcase your projects in a more tailored and visually appealing way.

Building a Personal Portfolio Website

A personal portfolio website acts as your digital calling card, often making the first impression before an interview. Tools like GitHub Pages allow you to host static websites for free, and static site generators like Hugo can help you demonstrate skills in templating, Markdown, and CI/CD processes.

Focus on featuring 3–5 polished, end-to-end projects. When describing these projects, you can follow the STAR method (Situation, Task, Action, Result) or break it down into your role, the problem you solved, the solution you implemented, and the impact it had.

To make your site more accessible, shorten its URL using tools like Bitly and consider generating a QR code for inclusion on your physical resume. If you're not a frontend expert, tools like ChatGPT or Gemini can assist in customizing HTML5 templates to create a clean, professional design.

Deploying Projects for Live Demonstrations

Live demos bring your work to life, turning static code into interactive experiences that showcase your skills in action. To deploy your projects:

- Serialize your AI models using libraries like pickle or joblib for easy integration.

- Include a requirements.txt file with exact package versions (e.g., scikit-learn==1.2.1) to ensure smooth setup.
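The serialization step above can be sketched with pickle from the standard library. `ThresholdModel` is a hypothetical stand-in for a trained model so the example runs without extra dependencies; with scikit-learn you would more commonly use joblib's `dump` and `load` in the same way. One caveat worth stating in your README: unpickling can execute arbitrary code, so only load model files you created or trust.

```python
import pickle
from dataclasses import dataclass
from pathlib import Path
from typing import List, Sequence


@dataclass
class ThresholdModel:
    """Hypothetical stand-in for a trained model: predicts 1 at or above a threshold."""
    threshold: float

    def predict(self, values: Sequence[float]) -> List[int]:
        return [int(v >= self.threshold) for v in values]


def save_model(model: object, path: Path) -> None:
    """Serialize a model to disk so the demo app can load it at startup."""
    with path.open("wb") as f:
        pickle.dump(model, f)


def load_model(path: Path) -> object:
    """Load a model from disk. Only unpickle files from a trusted source."""
    with path.open("rb") as f:
        return pickle.load(f)
```

Pinning exact versions in requirements.txt matters here in particular, because a pickled model may fail to load, or behave differently, under a different library version than the one that saved it.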

For quick prototypes, Streamlit is an excellent choice, and you can host non-commercial projects for free on Streamlit Community Cloud. If you’re working on backend applications, platforms like Railway offer flexible hosting options, including free tiers. These platforms often integrate with GitHub, enabling automatic redeployments whenever you push new code.

To make your demos accessible, add a link to the hosted application in both the "About" section of your GitHub repository and its README file. Before sharing, test your deployment by installing dependencies in a fresh environment (using pip install -r requirements.txt) to ensure everything works seamlessly.

For a deeper dive into deploying production-level AI systems, consider joining the Spring 2026 AI Engineering Boot Camp at DataExpert.io Academy.

Conclusion

By 2026, your portfolio needs to showcase more than just technical skills - it must prove you can solve real-world problems from start to finish. The demand for AI talent is skyrocketing, with AI-related job postings jumping 25.2% in Q1 2025 and 92% of executives planning to boost AI spending over the next three years. These roles, offering a median salary of $156,998, require more than theoretical expertise. Employers are looking for tangible proof of your abilities.

Focus on a handful of polished, end-to-end projects rather than a long list of basic experiments. Projects that highlight the entire AI workflow - from cleaning raw data to deploying production-ready systems - stand out far more than scattered tutorials. Tailor your efforts to align with your target role, whether it’s research, production engineering, or a specific industry like healthcare or finance. This approach not only highlights your technical skills but also your ability to deliver practical solutions.

Don’t forget to document your work clearly. Use concise READMEs and architecture diagrams to explain your projects. Include measurable results - like improved model accuracy or efficiency gains - to showcase your impact. With 78% of global companies now using AI in at least one business function, hiring managers are seeking professionals who grasp both the technical and ethical aspects of AI. Highlight your ability to address challenges like bias detection and model monitoring to demonstrate your awareness of responsible AI practices.

Finally, presentation matters. Recruiters often make decisions quickly, so a clean, professional layout can make a big difference. Use pinned repositories, live demos, and a personal website to present your work effectively. Starting with a 3-day MVP that addresses a single, real-world problem is a great way to build momentum, then scale up from there.

FAQs

What are the best 3–5 projects to include?

The best projects for an AI engineering portfolio demonstrate hands-on skills, problem-solving abilities, and expertise with tools. Here are a few examples:

- Designing comprehensive machine learning or deep learning models for tasks like image classification or speech-to-text applications.

- Constructing scalable data pipelines using platforms like AWS or Snowflake to handle large datasets efficiently.

- Developing deployable AI solutions, such as chatbots or recommendation engines, to showcase both technical knowledge and their potential impact on business outcomes.

How do I show measurable impact in my projects?

To demonstrate the impact of your AI projects, emphasize clear, measurable results that showcase your contributions. Use metrics such as model accuracy, precision, recall, or cost reductions to back up your claims. Highlight examples of solutions that address specific problems, whether through competition outcomes or production-ready applications. By providing concrete data and performance indicators, you can effectively illustrate your ability to deliver meaningful results and solve real challenges.

Should I build live demos or focus on GitHub?

GitHub is a must-have for any AI engineer looking to showcase their skills. It’s more than just a code repository - it’s a platform where you can demonstrate your expertise in coding, project execution, and teamwork. By maintaining repositories with runnable notebooks and well-written documentation, you make it easy for others (including recruiters) to explore your work.

Want to stand out even more? Add live demos or link them directly to your GitHub. These can give potential employers a firsthand look at your projects in action, showing off your technical abilities and real-time functionality. This isn’t just a great way to make your portfolio shine - it’s a practice that aligns with what the industry values most.